Overview

Today’s feed mixed cool product craft with bigger arcs in tech. A quant bot showed how market microstructure still leaves money on the table, design and dev tools cut the path from idea to demo to minutes, and year-end reels from Tesla, NASA, and an AI platform set the tone for 2026. There is also a neat Linux install trick and a thoughtful conversation about how brains learn from sparse data.

The big picture

Markets, makers, and milestones, all in a day’s scroll.

Spread arb in plain sight on Polymarket

@gemchange_ltd posted a crisp visual of a bot scooping $324k in 25 days on Polymarket’s 15-minute BTC markets. The approach is delta-neutral: enter one leg, wait for the spread to dislocate, then take the opposite leg. Across 14,822 trades, entries cluster when the combined spread tops 4 cents, with holds of 8-12 minutes and 4-16% per trade on positions priced around 84-96 cents. It is a tidy case study in retail prediction-market inefficiencies and microspread timing.
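To make the mechanics concrete, here is a minimal Python sketch of one common reading of this setup, assumed here rather than confirmed by the post: in a binary UP/DOWN market exactly one share settles at $1, so holding both legs locks in $1 minus what the pair cost, wherever BTC ends up. Prices are whole cents, fees and slippage are ignored, and the function names and the 4-cent threshold constant are illustrative.

```python
# Sketch of paired-leg ("delta-neutral") entry logic in a binary market.
# Assumptions (not from the post): the winning share settles at 100 cents,
# prices are whole cents, fees/slippage are ignored, names are hypothetical.

PAYOUT_CENTS = 100   # one of the two legs always settles at $1.00
MIN_EDGE_CENTS = 4   # hypothetical threshold mirroring the "4 cents" figure


def locked_profit_cents(first_leg: int, second_leg: int) -> int:
    """Profit per UP+DOWN share pair, whatever the outcome."""
    return PAYOUT_CENTS - (first_leg + second_leg)


def should_complete_pair(first_leg: int, opposite_ask: int,
                         min_edge: int = MIN_EDGE_CENTS) -> bool:
    """Take the opposite leg only once the pair's total cost leaves enough edge."""
    return locked_profit_cents(first_leg, opposite_ask) >= min_edge


def return_on_cost(first_leg: int, second_leg: int) -> float:
    """Locked profit as a fraction of the capital spent on the pair."""
    return locked_profit_cents(first_leg, second_leg) / (first_leg + second_leg)


if __name__ == "__main__":
    first = 55                      # bought UP at 55c
    for ask in (48, 45, 41):        # DOWN ask drifting lower while we wait
        print(ask, should_complete_pair(first, ask))
    # 48 False (pair costs 103c), 45 False (100c), 41 True (96c)

    print(locked_profit_cents(first, 41))        # 4 cents per pair
    print(round(return_on_cost(first, 41), 4))   # 0.0417 on 96c of capital
    print(round(return_on_cost(50, 34), 4))      # 0.1905 on 84c of capital

    # Averages implied by the headline figures
    print(round(324_000 / 14_822, 2))  # 21.86 dollars per trade
    print(324_000 / 25)                # 12960.0 dollars per day
```

Under this reading, the 4-16% figure matches 4-16 cents of locked profit per $1 of payout on pairs costing 84-96 cents; measured against capital spent, the same trades would return roughly 4.2-19%. The headline numbers average out to about $21.86 per trade and $12,960 per day.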

AI-made site in under 20 minutes

@eshaanpawan showed a “Tony Stark” portfolio built with Runable, featuring a mouse-following blob cursor that reveals a suited portrait over a black tee, smooth 300ms transitions, and hover states for readability. The prompts aim for tight alignment between the two images so the reveal lands cleanly, with tools like Nano Banana Pro or GPT-4o generating the paired renders. The site nods to Lando Norris’ vertical driver site and pushes the idea that a first draft can take minutes, not days.
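The reveal effect comes down to two pieces: a blob that trails the cursor, and a composite that shows one image inside the blob and the other outside it. The Python sketch below models both on tiny character grids purely as an illustration; it is not Runable’s output, which would be browser code. The function names, the exponential easing, and reading “300ms” as the time the blob takes to close about 95% of the gap are all assumptions.

```python
import math


def follow(blob, cursor, dt, duration=0.3):
    """Move the blob toward the cursor with exponential easing.

    The rate is chosen so ~95% of the gap closes after `duration` seconds
    (exp(-3) is about 0.05), independent of frame rate.
    """
    k = 1.0 - math.exp(-3.0 * dt / duration)
    return (blob[0] + (cursor[0] - blob[0]) * k,
            blob[1] + (cursor[1] - blob[1]) * k)


def reveal(base, overlay, cx, cy, radius):
    """Show `overlay` inside the circular blob and `base` everywhere else.

    Both images must be the same size and pixel-aligned: the swap happens at
    identical coordinates, so any misalignment shows up as a seam.
    """
    out = []
    for y, (base_row, over_row) in enumerate(zip(base, overlay)):
        row = "".join(
            o if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2 else b
            for x, (b, o) in enumerate(zip(base_row, over_row))
        )
        out.append(row)
    return out


if __name__ == "__main__":
    tee = ["TTTTT"] * 5    # stand-in for the black-tee render
    suit = ["SSSSS"] * 5   # stand-in for the suited render
    for line in reveal(tee, suit, cx=2, cy=2, radius=1):
        print(line)
    # TTTTT
    # TTSTT
    # TSSST
    # TTSTT
    # TTTTT

    blob = (0.0, 0.0)
    for _ in range(18):            # 18 frames at 60 fps = 0.3 s
        blob = follow(blob, (100.0, 0.0), dt=1 / 60)
    print(round(blob[0], 1))       # 95.0
```

Because the composite swaps pixels at the same coordinates, any offset between the two renders shows up as a visible jump at the blob’s edge, which is why the prompts push for tight alignment.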

Figma-only glassmorphism that feels physical

@designbypepo’s “Reflect” buttons are built entirely in Figma, using background blur, gradients, masks, and layered rectangles to fake refraction and depth. Replies unpack tricks such as pairing high refraction with low depth, and using pattern fills for colour shifts. It shows how far interactive prototyping in Figma has come without code.

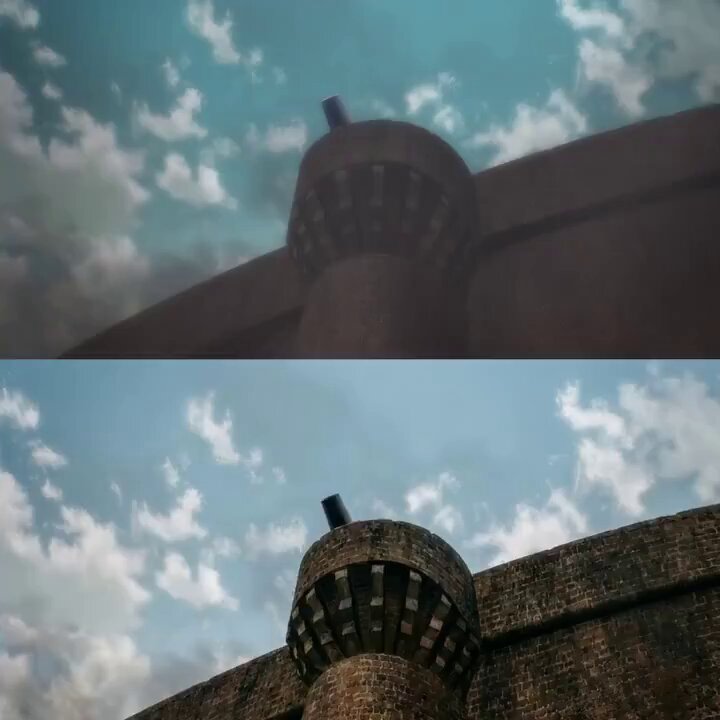

Blender retopology that is satisfying to watch

@3DxDEV7 highlighted Jan van den Hemel’s timelapse that morphs a cube into an animation-ready hand. It uses hundreds of Shape Keys to move through modelling stages and Ian Hubert’s Camera Shakify for subtle motion, keeping the focus on clean edge loops and deformation-friendly topology.
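Shape keys are easy to picture with a little arithmetic. In Blender’s relative mode, each key stores a full set of vertex positions, and the evaluated mesh is the basis plus each key’s weighted offset from it: v = basis + Σ wᵢ (keyᵢ − basis), where each weight is that key’s Value slider. The pure-Python sketch below, which does not use Blender’s API, applies that formula and crossfades consecutive keys so a single progress value steps the mesh through modelling stages. The staging scheme and function names are assumptions about how such a timelapse could be driven, not a description of van den Hemel’s file.

```python
def mix_shape_keys(basis, keys, weights):
    """Evaluate relative shape keys per vertex: basis + sum(w * (key - basis))."""
    mixed = []
    for i, base in enumerate(basis):
        v = list(base)
        for key, w in zip(keys, weights):
            for axis in range(3):
                v[axis] += w * (key[i][axis] - base[axis])
        mixed.append(tuple(v))
    return mixed


def stage_weights(progress, n_keys):
    """Weights that crossfade consecutive stages (hypothetical scheme).

    Stage 0 is the basis and stage s (s >= 1) is keys[s - 1]; a progress of
    1.5 means halfway between stage 1 and stage 2.
    """
    progress = max(0.0, min(float(progress), float(n_keys)))
    weights = [0.0] * n_keys
    stage = int(progress)
    frac = progress - stage
    if stage >= 1:
        weights[stage - 1] = 1.0 - frac  # fade the current stage out...
    if frac > 0.0:
        weights[stage] = frac            # ...while the next stage fades in
    return weights


if __name__ == "__main__":
    # One vertex, three stages: basis -> moved in x -> moved in y as well
    basis = [(0.0, 0.0, 0.0)]
    keys = [[(1.0, 0.0, 0.0)], [(1.0, 2.0, 0.0)]]
    for p in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(p, mix_shape_keys(basis, keys, stage_weights(p, len(keys))))
```

Because adjacent weights always sum to 1, the blend reduces to a straight interpolation between two neighbouring stages, so animating one progress value is enough to sweep through hundreds of keys in order.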

Tesla’s 2025 in review, eyes on 2026

Tesla’s montage claims 9 million vehicles produced to date, record Megapack deployments topping 31 GWh for the year, and Optimus moving into factory tasks. By Tesla’s account, Model 2 reached volume production in June, Cybertruck led U.S. pickup sales, and FSD offered unsupervised rides in select regions. The teaser points to Robotaxi news next year, after a tough year for the EV market.

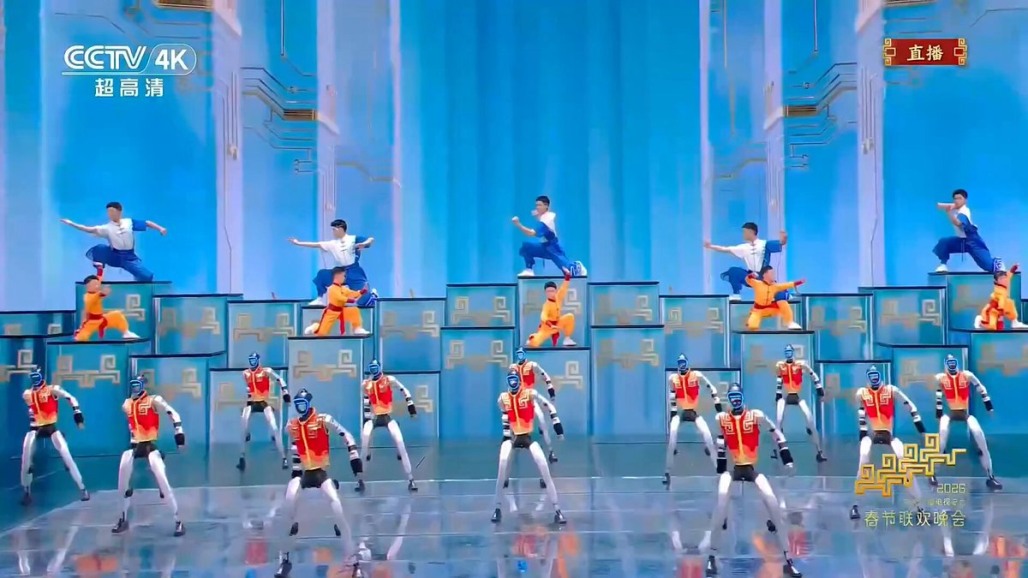

NASA’s year-end reel and Artemis II prep

@NASA recapped launches, astronaut training, Mars rover work, quiet supersonic tests, and science spanning the Sun to deep space. The note looks ahead to the crewed Artemis II lunar flyby, framed as groundwork for future Moon landings and eventual Mars missions, with international partners waiting in the wings.

ImagineArt’s creator numbers and model updates

@ImagineArt_X marked 3x growth over 2024, 300k creators, and 30M+ users. The reel mentions ImagineArt 1.5 and KLING 2.6 for text-to-video, plus 51M+ generations. The message is simple: show up, ship, and rally a global creative base around a single suite.

Install Linux from Windows with a click

@tpm_28 answered a question about distro support while showing their Windows .exe, which handles the ISO download, partitioning, and installation of Linux Mint with no USB stick required. It lowers the entry bar for dual-boot setups and has drawn growing interest since mid-2024.
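One step a tool like this has to get right is confirming the downloaded ISO is intact before it touches any partitions. The post does not say whether the installer does this, so treat the following as a hypothetical sketch: it hashes the file in chunks with Python’s standard `hashlib` and compares the result against an entry in a `sha256sum`-style checksum list (`<hex digest>  <file name>`), the format Linux Mint publishes alongside its ISOs. All function and file names are illustrative.

```python
import hashlib
import io

CHUNK = 1 << 20  # read 1 MiB at a time so multi-GB ISOs never sit in memory


def sha256_of_stream(stream) -> str:
    """Hex SHA-256 of a binary file-like object, read in chunks."""
    h = hashlib.sha256()
    for chunk in iter(lambda: stream.read(CHUNK), b""):
        h.update(chunk)
    return h.hexdigest()


def expected_digest(sums_text: str, iso_name: str):
    """Find iso_name's digest in sha256sum-style text ('<hex>  <name>' or '<hex> *<name>')."""
    for line in sums_text.splitlines():
        parts = line.strip().split(maxsplit=1)
        if len(parts) == 2 and parts[1].lstrip("*") == iso_name:
            return parts[0].lower()
    return None


def iso_is_intact(path: str, sums_text: str, iso_name: str) -> bool:
    """True only if the ISO is listed and its hash matches; otherwise don't partition."""
    want = expected_digest(sums_text, iso_name)
    if want is None:
        return False
    with open(path, "rb") as f:
        return sha256_of_stream(f) == want


if __name__ == "__main__":
    fake_iso = io.BytesIO(b"not really an ISO")
    digest = sha256_of_stream(fake_iso)
    sums = f"{digest}  linuxmint-example.iso\n"   # hypothetical file name
    print(expected_digest(sums, "linuxmint-example.iso") == digest)  # True
```

Refusing to continue when the file is missing from the list, rather than only when the hash differs, keeps the check fail-safe before any disk changes.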

How brains learn from little data

@dwarkesh_sp’s episode with Adam Marblestone argues that sample efficiency comes from rich, evolution-shaped reward systems and curricula in subcortical circuits, while the cortex stays general-purpose. That view ties back to Ilya Sutskever’s question about how genomes encode drives for high-level concepts, and points to connectomics and cell-type atlases as keys to the next AI leap.

Why it matters

The Polymarket bot makes a blunt point: liquidity is noisy, spreads vary, and careful timing still pays over short horizons. That is a lesson for any retail trader tempted by narrative over mechanics.

The maker posts show a clear pattern: Runable, Figma, and Blender now support fast, high-fidelity drafts. The time saved shifts pressure to taste, structure, and QA, because while the first version is easy, the last 10% still requires judgement.

Tesla, NASA, and ImagineArt use year-end reels to claim momentum, but also to set expectations. Energy storage growth, lunar prep, and creative AI at scale all point to 2026 as a year where deployment tempo, not just research, will decide winners.

The Windows .exe installer for Linux reduces friction for curious users, which could nudge adoption among people who were previously put off by making a bootable USB key or changing BIOS settings.

Marblestone’s take matters for AI research. If innate objectives and staged learning are the secret sauce, better maps of the brain could guide more sample-efficient systems and offer safer scaffolds for how we set goals in machines.