Overview

Today’s feed had two loud themes: developer tools that promise to tidy up messy codebases, and AI models pushing further into “do the task for me” territory. In the background, the real world kept tugging back, from robotaxis stuck in flash floods to a reminder that medicine is learned through experience, not just text.

The big picture

We are watching two races happen at once. AI assistants are getting longer memories and sharper hands inside tools people already use, like Copilot and slide builders. At the same time, there’s a growing sense that the hard problems are not in the prompts; they’re in the messy edge cases: weather, safety, healthcare, and security. Those gaps are where the next arguments (and breakthroughs) will live.

React Doctor wants to be your codebase’s health check

Aiden Bai dropped React Doctor, a CLI that scans React projects for common pain points: unnecessary useEffect calls, accessibility problems, and prop drilling where context or composition would do a better job. The pitch is simple: run it, get a score, fix what it flags, repeat until you stop bleeding.

The more interesting bit is the workflow it hints at: automated review that feels closer to “continuous care” than a one-off lint pass. If it becomes part of CI for teams, it could change what “good React hygiene” means day to day.

Claude Sonnet 4.6 arrives with a 1M-token context window (in beta)

Anthropic announced Claude Sonnet 4.6, positioning it as a broad upgrade: coding, agent planning, long-context reasoning, computer use, and design. The headline number is the 1M-token context window in beta, which is the sort of capacity that turns “read this doc” into “hold the whole repo in your head”.

This matters less as a benchmark flex and more as a workflow change. Bigger context starts to look like fewer hand-offs between tools, fewer “remind the model” moments, and more end-to-end tasks that stay coherent.
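
To put “hold the whole repo in your head” into rough numbers, here is a minimal sketch that estimates whether a codebase would fit in a 1M-token window. It leans on assumptions that are not from the announcement: the roughly 4-characters-per-token ratio is a common rule of thumb rather than Anthropic’s tokenizer, and the helper names, file extensions, and output reserve are hypothetical choices for illustration.

```python
import os

# Assumption: ~4 characters per token is a rough heuristic for English text
# and code. Real tokenizers differ, so treat every result as an estimate.
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW_TOKENS = 1_000_000  # the beta window size cited above


def estimate_tokens(text: str) -> int:
    """Estimate tokens from character count, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def estimate_repo_tokens(root: str, extensions=(".py", ".ts", ".tsx", ".md")) -> int:
    """Sum the estimated tokens of every matching file under root."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(extensions):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as fh:
                    total += estimate_tokens(fh.read())
    return total


def fits_in_context(token_count: int, reserve_for_output: int = 50_000) -> bool:
    """Check the estimate against the window, leaving room for the reply.

    The 50,000-token reserve is an arbitrary illustrative default.
    """
    return token_count + reserve_for_output <= CONTEXT_WINDOW_TOKENS
```

Calling `fits_in_context(estimate_repo_tokens("."))` from a project root gives a quick yes or no. By this heuristic, 1M tokens is on the order of 4 MB of source text, so many small and mid-sized repos would fit while large monorepos would not.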

Sonnet 4.6 rolls into GitHub Copilot

GitHub followed up with news that Sonnet 4.6 is rolling out in Copilot. Their framing is telling: “agentic coding” and “search operations” across large codebases. That is Copilot leaning further away from autocomplete and towards delegated work.

If you are the person who usually does the first pass on refactors, test scaffolding, or repo-wide hunting, this is the kind of update that could change your daily rhythm, assuming the rollout reaches you quickly.

PR review, but make it doom-scrollable

almonk pitched a TikTok-style interface for reviewing background agents’ PR suggestions: swipe through proposed changes, accept or reject, keep moving. It is half joke, half “this might actually work”, because the bottleneck is no longer generating fixes; it is humans staying awake while reviewing them.

There’s a serious product question hiding here: can you design a review flow that keeps judgement with the developer, while letting machines do the boring scanning and drafting at scale?

NotebookLM turns slide decks into a prompt-and-revise loop

NotebookLM added prompt-based revisions for slides and PPTX export. That sounds small until you remember how many people spend their week nudging decks around by hand: rewrite this bullet, tighten that title, match this tone, re-order these sections, export, repeat.

The reactions were basically, “finally”. It is also a quiet warning shot for jobs where the output is mostly well-formatted summaries on a deadline.

AI in healthcare: language is not residency

Bo Wang shared a Yann LeCun line that lands because it is obvious and still easy to forget: if text were enough to understand the world, you could learn medicine by reading. But you cannot. You need training in real settings, seeing thousands of normal cases so you can spot what is abnormal.

It is a reminder that “smart assistant” is not the same as “grounded judgement”, especially in healthcare where uncertainty and context are the whole game.

Grok 4.20 demos medical document analysis, and people are ready for it

DogeDesigner posted a demo of Grok 4.20 analysing a blood test report, translating lab values into plain English with ranges and notes. You can feel the demand: people want quick explanations, a second pair of eyes, something that reduces the intimidation factor of medical paperwork.

The sensible stance is still “use it as support, not a diagnosis”, but it is hard to ignore how fast this is becoming a default behaviour for curious (and anxious) patients.

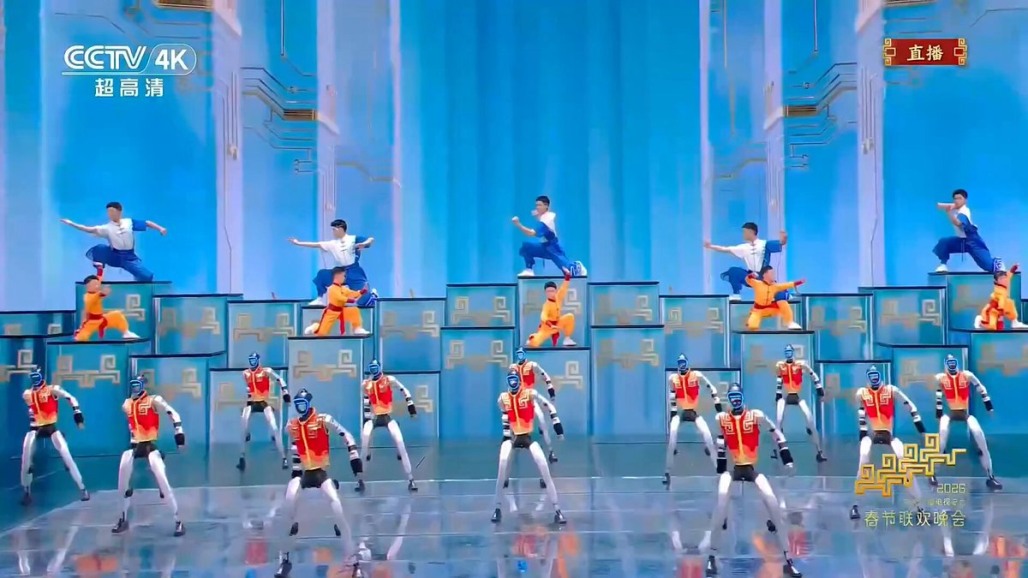

Robotaxis meet the weather, and the LiDAR argument flares again

niccruzpatane paired an Elon Musk quote about LiDAR struggling in snow, rain, or dust with footage of Waymo vehicles stalled during flash floods in LA. It is the sort of clip that gets passed around because it turns a technical trade-off into a street-level moment.

Whatever side you take on sensors, autonomy is still being judged in the conditions people actually live in, not the tidy ones in a demo.

PentAGI imagines a coordinated team of hacking agents

0xMarioNawfal highlighted PentAGI, where multiple agents split up a penetration test: recon, scanning, exploitation, then writing up the report. The scary part is not any single tool; it is the coordination and the speed, plus the idea that the system adapts as it goes.

This is also where defensive teams will have to respond in kind. If attackers can run “agent squads”, defenders will need their own.

A quiet space reminder: Artemis II runs on the people you never see

NASA posted about a podcast episode focused on the ground support crew behind Artemis II. It is a helpful counterweight to a day full of software announcements: big missions still rest on careful processes, checklists, and people doing unglamorous work under pressure.

Space news tends to orbit rockets and astronauts, but the ground teams are the ones who make “launch window” mean something real.