Overview

Today’s posts circle around two big themes: AI capability is climbing fast, and the supporting infrastructure and interfaces are starting to matter as much as the models. Alongside that, tools that used to be expensive and rare are getting cheaper and more accessible, from genome sequencing to browser-based engineering simulators, while the sheer scale of space hardware still stops people in their tracks.

The big picture

There’s a widening gap between what’s becoming cheap (software, prototypes, content creation, even genomes) and what’s becoming the real constraint (compute, power, integration, security, and trust). The conversation is also getting more honest: language skill is not the same as intelligence, and productivity gains can sit uncomfortably close to worries about who still feels useful in the loop.

Genome sequencing hits $100, and interpretation becomes the bottleneck

Ethan Mollick’s timeline is jaw-dropping: from hundreds of millions of dollars for the first human genome to $100 now. That shifts the centre of gravity away from producing the data and towards making sense of it, deciding what to store, and deciding who gets access.

It also hints at a near future where whole-genome sequencing is routine, which is exciting for medicine and messy for privacy.

AI coding autonomy keeps stretching, towards a full workday

Andrew Curran’s benchmark update is a neat way to visualise progress: the “time horizon” on software tasks (roughly, how long a task would take a skilled human, for tasks a model can still complete at a given success rate) keeps marching upwards, with Claude Opus 4.6 landing around 14.5 hours at 50% success.

Even with noisy evaluation and wide confidence intervals, the direction is hard to ignore: autonomous work is no longer about a quick snippet; it is now measured on the scale of a full working shift.

What happens when “useful” starts to feel scarce?

Shruti’s post pulls a bleak thread from a Sam Altman clip: if AI keeps outperforming people on more tasks, it is not just jobs that change but also self-worth and power dynamics.

The most interesting tension is the double effect: power risks concentrating at the top, even as tiny teams gain superpowers that used to belong to huge organisations.

LeCun’s reminder: language is not the whole game

Dustin quotes Yann LeCun pushing back on the idea that fluent language means intelligence. It is a useful cold shower in a week where chat and code demos are everywhere.

The underlying question is where “world understanding” comes from: text alone, embodiment, or something else entirely. Either way, it is a reminder not to confuse a convincing narrator with a system that can navigate reality.

Compute is the new megaproject, and space data centres are not it (yet)

TheHumanoidHub shares a clip of Sam Altman describing the GPU capacity needed for digital work and home humanoid robots as the largest and most complex infrastructure build ever attempted. He also dismisses orbital data centres as a non-starter in the current landscape.

Between energy, land, supply chains, and deployment speed, “just add compute” is turning into a geopolitical and industrial planning problem.

Usage time climbs: AI apps are becoming daily habits

a16z points to a simple metric that cuts through the noise: active users are spending more minutes per day in tools like ChatGPT and Claude. That suggests this is not just novelty adoption; people are finding repeatable ways to use the tools.

If the time curve keeps rising, the next fight is less about getting people to try AI and more about where, exactly, it sits in the workflow.

Palantir-style dashboards, now as a solo dev demo

Min Choi highlights a demo, built with help from Claude and Gemini, that looks uncomfortably close to “expensive government software”. Even if it’s only a prototype, it shows how fast the surface layer can be recreated.

The hard parts (security, procurement, and operational deployment) do not go away, but the barrier to showing something credible just dropped again.

The interface of coding is up for grabs

Yuchen Jin argues that terminal-first AI coding tools may be a short-lived phase, with the interface moving from apps to OS-level experiences and then to voice or glasses. It is a sensible prediction if assistants keep getting better at managing context, running software, and checking their own work.

The practical question is what people will trust: a tool that writes code, or a system that quietly rewires your environment while you talk.

AI video avatars and “all the models in one place” content stacks

Hedra is pushing longer, multi-speaker avatar videos, while Runway is bundling multiple video models into a single platform. Together, they point to a near-term reality where content creation becomes more about directing and editing choices than about wrestling with tooling.

The creative upside is obvious, but it also raises the bar for authenticity checks, especially when “uncut” stops meaning what it used to.

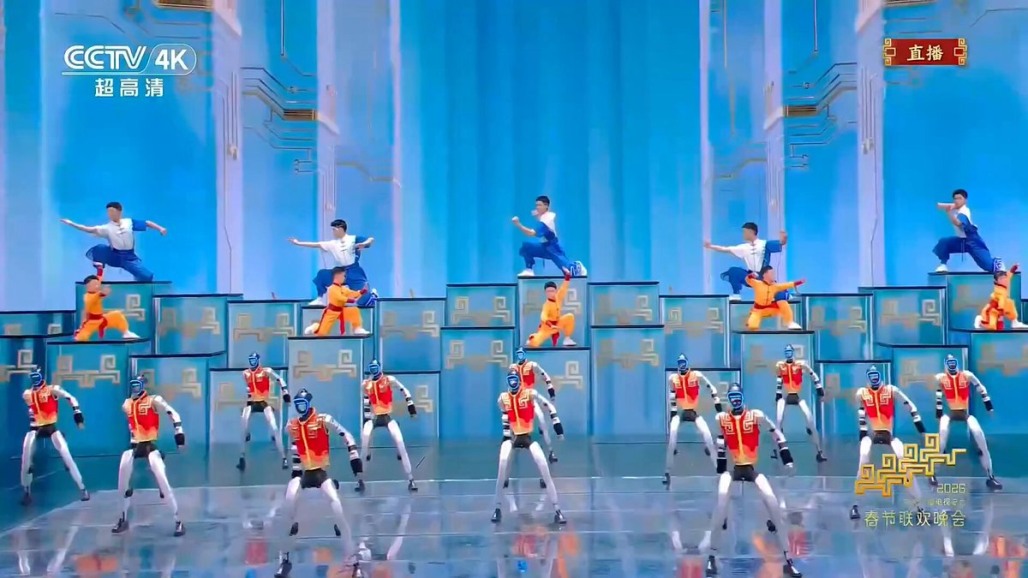

Starship scale, still startling even if you’ve seen it before

Dima Zeniuk’s clip is pure perspective: workers dwarfed by Starship’s structure. Whatever you think about timelines and politics, the raw industrial ambition is hard to shrug off.

It’s also a nice counterpoint to the rest of the feed: not everything is software, and some of the future still looks like steel, towers, and launch pads.